We are all aware that securing user access matters more than ever. This goal is simpler to achieve for resources managed in the cloud, but a similar approach is also possible when we have on-premises resources. Our users are more productive than ever, and they want fast access to their resources, whether from a corporate device, a personal tablet or a managed smartphone. Our goal is always to promote this productivity while securing these requests. This is why e-mail has become the “open door” that must be protected, no matter how it is accessed.

Azure AD Premium delivers a very useful feature for protecting user identities: Conditional Access policies. These policies protect the user identity and control what happens when a user tries to access one or more apps managed in Office 365.

But what if we want to protect our users’ access to an on-premises application such as OWA? (We have found many scenarios where organizations don’t have all user mailboxes hosted in Exchange Online.) This post provides quick guidance for this scenario.

1- Implementation of Azure AD Application Proxy

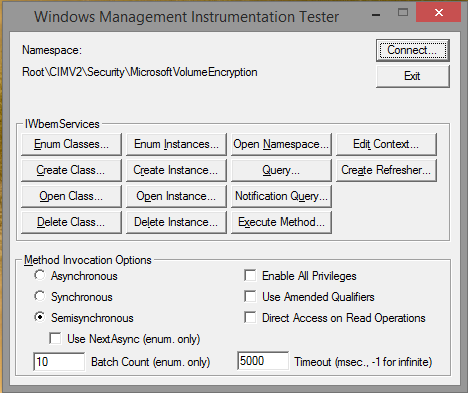

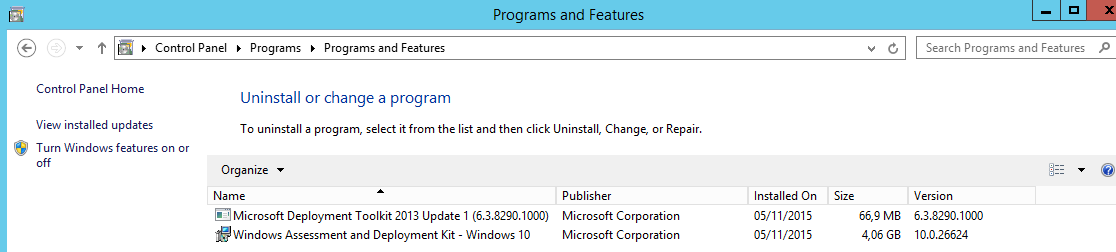

Azure AD Application Proxy comes into this scenario to deliver the user’s requests from the cloud (e.g., the My Apps portal). The implementation is quite simple. From the Azure AD portal, we download the connector MSI and install it on a dedicated pool of servers (to provide high availability).

At the end of the process, the server(s) must be visible in the Azure AD portal and the status must be “Active”.

2- Application registration process

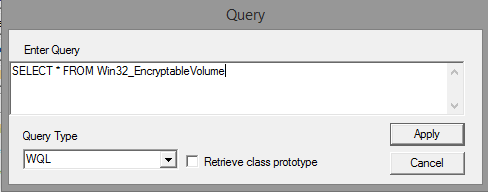

From the Azure AD portal, we can create the application. For this process, it is important to have the OWA internal URL at hand. Azure AD App Proxy “translates” the URL to deliver the requests coming from the Office 365 service.

To do that, select the option “+ Configure an app” shown in the previous image and fill in the required fields. The external URL is “provisioned” automatically, based on the internal URL options previously set.

At this point, we have a dedicated server (Azure AD App Proxy) that routes requests to an internal URL (the OWA internal URL) and delivers them over a secured channel through a public URL.
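To make the URL “translation” more concrete, here is a minimal Python sketch of the idea: a request for the public URL is mapped to the matching internal OWA URL. This is only an illustration of the concept, not how the connector is implemented. Both host names and the `to_internal` helper are hypothetical; use the URLs from your own app registration.

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical URLs for illustration only; replace with your own values.
INTERNAL_URL = "https://mail.contoso.local/owa/"
EXTERNAL_URL = "https://owa-contoso.msappproxy.net/owa/"


def to_internal(request_url: str,
                external_base: str = EXTERNAL_URL,
                internal_base: str = INTERNAL_URL) -> str:
    """Map a request for the published external URL to the internal URL."""
    req = urlsplit(request_url)
    ext = urlsplit(external_base)
    inn = urlsplit(internal_base)

    # Only requests for the published host and path are translated.
    if (req.scheme, req.netloc.lower()) != (ext.scheme, ext.netloc.lower()):
        raise ValueError("request is not for the published external URL")
    if not req.path.startswith(ext.path):
        raise ValueError("request path is outside the published path")

    # Keep everything after the published base path, plus the query string.
    remainder = req.path[len(ext.path):]
    return urlunsplit(
        (inn.scheme, inn.netloc, inn.path + remainder, req.query, req.fragment)
    )


if __name__ == "__main__":
    print(to_internal("https://owa-contoso.msappproxy.net/owa/auth/logon.aspx?x=1"))
```

The user only ever sees the external URL; the internal host name stays inside the corporate network.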

3- Activation of User’s SSO

To deliver a better experience for end users, SSO can easily be enabled from Azure AD Connect. During the process, a computer object named AZUREADSSOACC is created in our on-premises Active Directory.

To achieve the full AAD SSO experience, a couple of GPO settings must be configured. These settings differ slightly depending on the browser; this demo covers the Edge (Chromium-based) scenario.

First, download the Microsoft Edge ADMX files, import them into Group Policy management and configure the following settings:

User Configuration > Administrative Templates > Microsoft Edge > HTTP Authentication

- Specifies a list of servers that Microsoft Edge can delegate user credentials to

- Configure list of allowed authentication servers

For both settings, the value is the same, the SSO URL: https://autologon.microsoftazuread-sso.com
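For a quick check on a single test machine, the two settings above can also be expressed as registry values. The sketch below renders a `.reg` file in Python. It assumes the two GPO settings map to the Edge policy names `AuthNegotiateDelegateAllowlist` and `AuthServerAllowlist` under the per-user Edge policy key; verify these names against the ADMX files you imported. The `render_reg_file` helper is hypothetical, and in production the GPO remains the right way to deploy the settings.

```python
# SSO URL taken from the GPO settings described in this post.
SSO_URL = "https://autologon.microsoftazuread-sso.com"

# Assumption: per-user policy key used by Microsoft Edge (Chromium-based).
EDGE_POLICY_KEY = r"HKEY_CURRENT_USER\Software\Policies\Microsoft\Edge"

# Assumption: policy names behind the two GPO display names above.
EDGE_POLICIES = {
    "AuthNegotiateDelegateAllowlist": SSO_URL,  # servers credentials can be delegated to
    "AuthServerAllowlist": SSO_URL,             # allowed authentication servers
}


def render_reg_file(key: str, values: dict) -> str:
    """Render string values under one registry key as .reg file text."""
    lines = ["Windows Registry Editor Version 5.00", "", f"[{key}]"]
    for name, value in values.items():
        lines.append(f'"{name}"="{value}"')
    return "\r\n".join(lines) + "\r\n"


if __name__ == "__main__":
    print(render_reg_file(EDGE_POLICY_KEY, EDGE_POLICIES))
```

Comparing this output with what the GPO actually writes on a client is a simple way to confirm that the policy has been applied.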

For more details, see the following URL.

4- Create the Conditional Access Policy

Since the application is already registered, the Conditional Access policy only needs to target the app configured in step 2 and to require MFA.
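The same policy can also be described as a request body for the Microsoft Graph Conditional Access API instead of being created in the portal. The Python sketch below only builds the JSON payload; it does not authenticate or send anything. The application ID is a placeholder, the `build_mfa_policy` helper is hypothetical, and targeting all users as well as starting in report-only mode are assumptions made for this example.

```python
import json

# Graph endpoint that accepts Conditional Access policies (POST).
GRAPH_ENDPOINT = "https://graph.microsoft.com/v1.0/identity/conditionalAccess/policies"

# Placeholder: replace with the application ID of the OWA app from step 2.
OWA_APP_ID = "00000000-0000-0000-0000-000000000000"


def build_mfa_policy(app_id: str,
                     display_name: str = "Require MFA for published OWA",
                     enforce: bool = False) -> dict:
    """Build a policy body that requires MFA for a single application."""
    return {
        "displayName": display_name,
        # Report-only by default, so the impact can be reviewed first.
        "state": "enabled" if enforce else "enabledForReportingButNotEnforced",
        "conditions": {
            "clientAppTypes": ["all"],
            "users": {"includeUsers": ["All"]},  # assumption: all users
            "applications": {"includeApplications": [app_id]},
        },
        "grantControls": {"operator": "OR", "builtInControls": ["mfa"]},
    }


if __name__ == "__main__":
    print("POST", GRAPH_ENDPOINT)
    print(json.dumps(build_mfa_policy(OWA_APP_ID), indent=2))
```

Keeping the policy in report-only mode at first makes it possible to check the sign-in logs before MFA is enforced for everyone.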

5- User experience

With all the required configuration in place, the user experience will be:

a) The user opens the My Apps portal to reach the published OWA (the available URL can be deactivated) without being asked for an AAD logon (thanks to the SSO experience, which applies only to managed devices). We can require MFA for the My Apps app as well.

b) The user clicks on the available app (OWA). The Conditional Access policy takes control and requires the user to respond to an MFA request.

c) Azure AD App Proxy redirects the request to the internal URL, and the user can securely access their mailbox using Azure MFA.

d) OWA page

Note: All configuration tasks are logged in Event Viewer on the Azure AD App Proxy server and can be reviewed for troubleshooting at the following path: Event Viewer > Applications and Services Logs > Microsoft > AadApplicationProxy > Connector > Admin
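For troubleshooting without opening the Event Viewer UI, the same log can be read from a script on the connector server. The Python sketch below builds a `wevtutil` query for the most recent events. The channel name `Microsoft-AadApplicationProxy-Connector/Admin` is an assumption derived from the Event Viewer path above, so confirm it on your server before relying on it. Both helper functions are hypothetical, and `read_recent_events` only works on Windows.

```python
import subprocess

# Assumption: channel name derived from the Event Viewer path in the note above.
LOG_CHANNEL = "Microsoft-AadApplicationProxy-Connector/Admin"


def build_query_command(count: int = 20, channel: str = LOG_CHANNEL) -> list:
    """Build a wevtutil command that returns the newest events as text."""
    if count < 1:
        raise ValueError("count must be at least 1")
    return [
        "wevtutil", "qe", channel,
        f"/c:{count}",  # maximum number of events to return
        "/rd:true",     # read newest events first
        "/f:text",      # plain-text output instead of XML
    ]


def read_recent_events(count: int = 20) -> str:
    """Run the query on the connector server (Windows only)."""
    result = subprocess.run(
        build_query_command(count), capture_output=True, text=True, check=True
    )
    return result.stdout


if __name__ == "__main__":
    print(" ".join(build_query_command(5)))
```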

/ Fábio